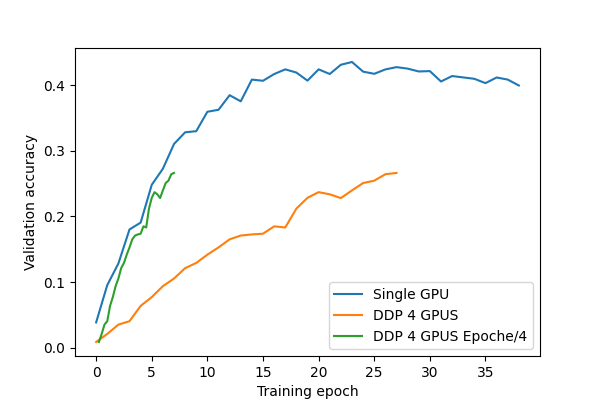

PyTorch multi-GPU training for faster machine learning results :: Päpper's Machine Learning Blog

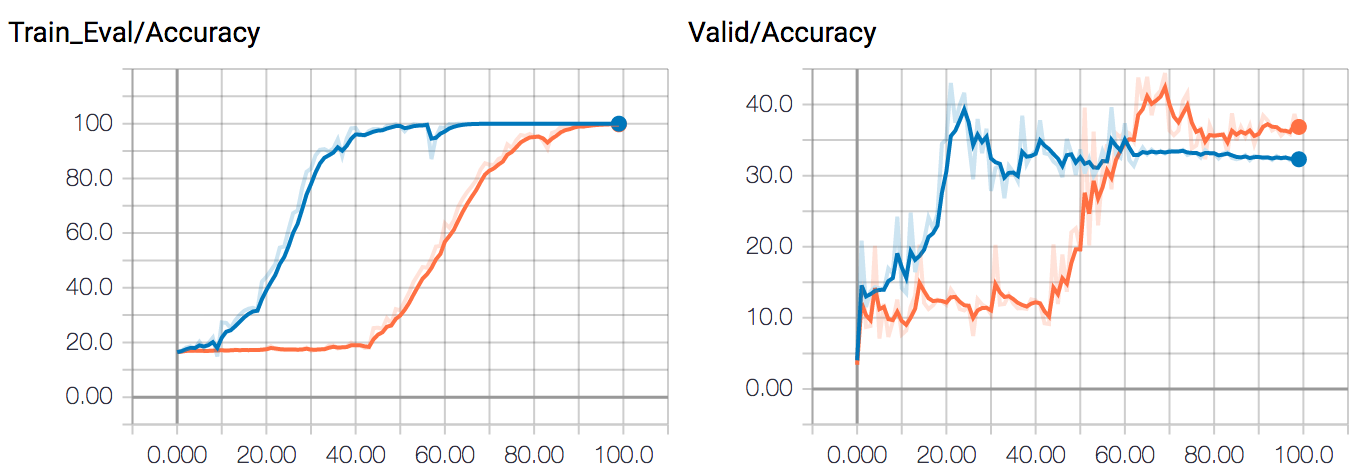

Validating Distributed Multi-Node Autonomous Vehicle AI Training with NVIDIA DGX Systems on OpenShift with DXC Robotic Drive | NVIDIA Technical Blog

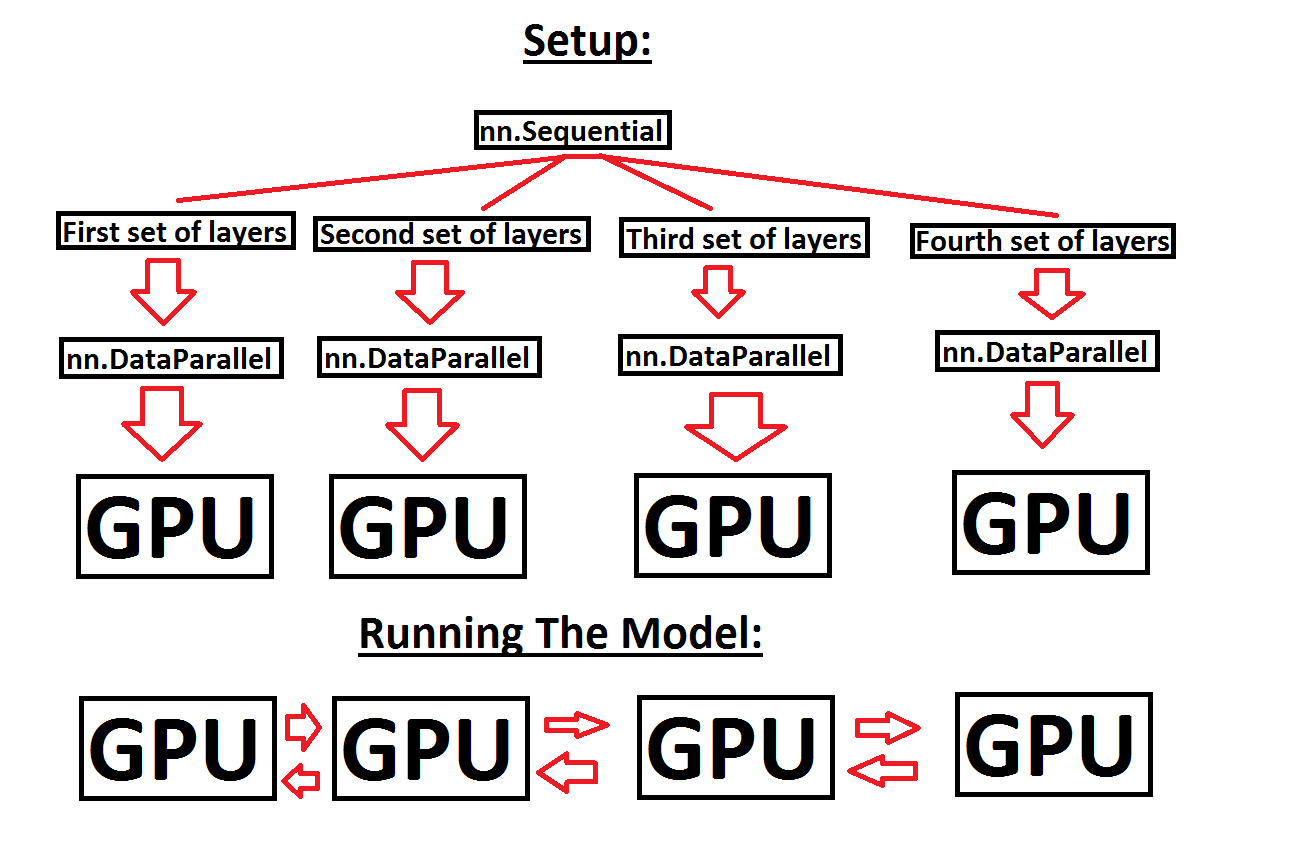

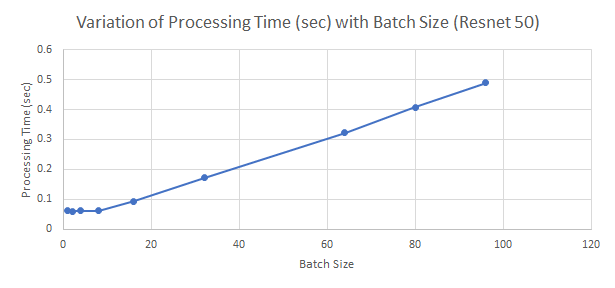

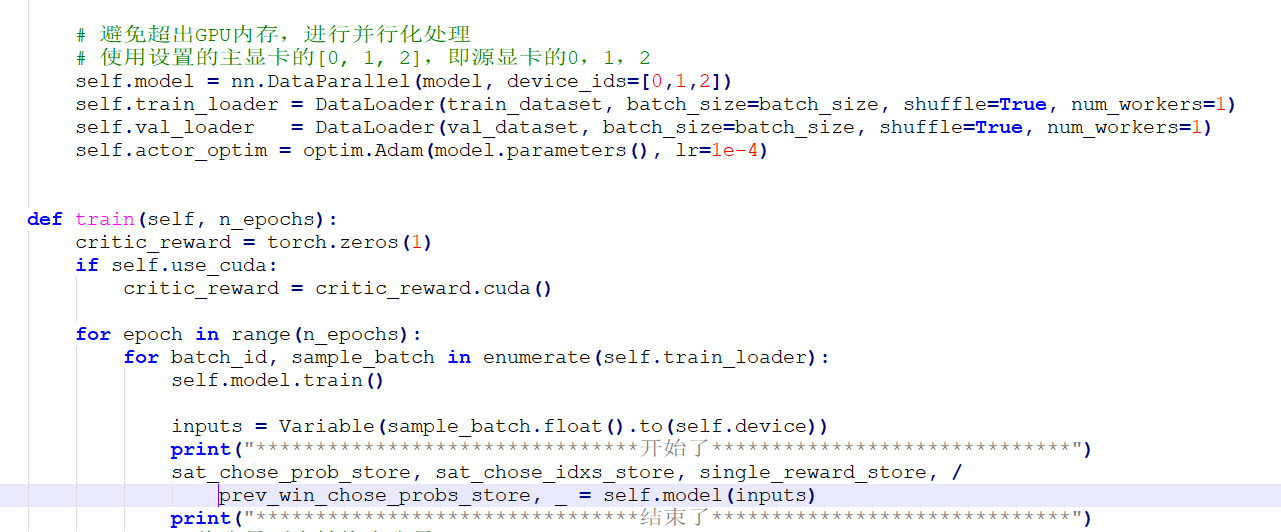

Learn PyTorch Multi-GPU properly. I'm Matthew, a carrot market machine… | by The Black Knight | Medium

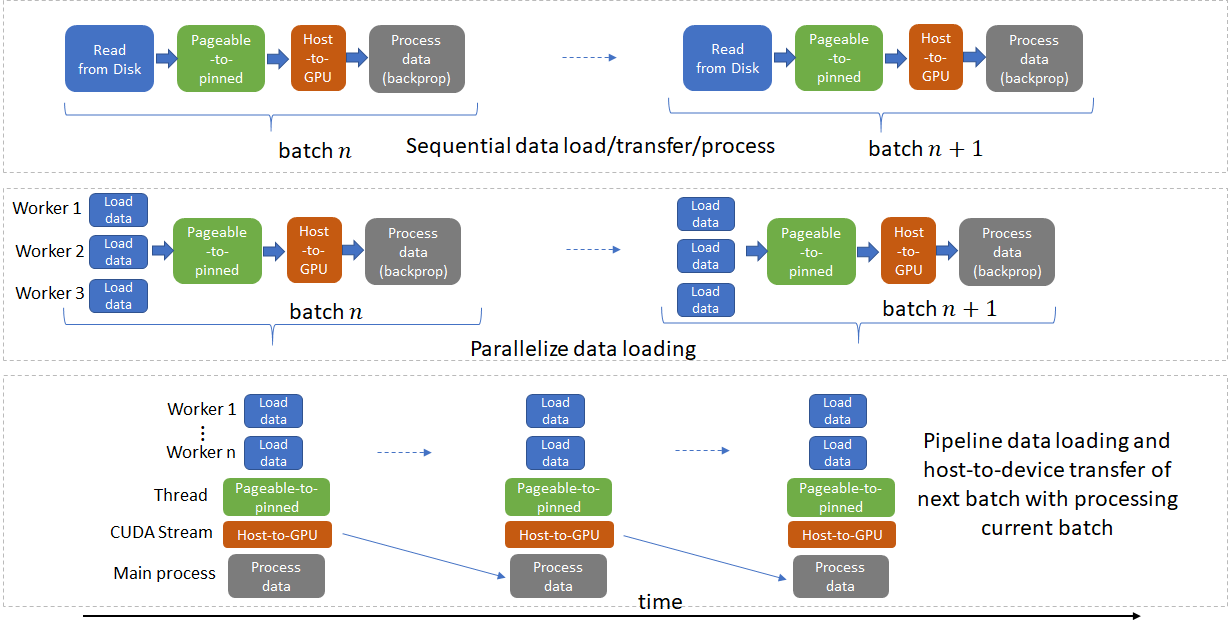

How distributed training works in Pytorch: distributed data-parallel and mixed-precision training | AI Summer